The last few posts have focused on AV functional architecture and sensors. In today’s post, we finally come back to functional safety to talk about AV safety challenges. What makes AV safety hard?

Autonomous vehicles pose novel challenges to traditional safety engineering methods. As I write this, the current version of the IEC 61508 standard simply states that artificial intelligence (AI) approaches are “not recommended” for safety-critical systems. The committee is actively working on this topic, but the gap remains.

That is starting to change, and AI safety is now an active research area. AV technology is a relatively new and fast-developing domain, and system safety for autonomous vehicles is an even newer sub-specialty within it. Several researchers have investigated the fundamental safety issues and challenges facing AV developers.

Autonomous Vehicle Safety

Below, we provide an overview of some of the most important and interesting research that attempts to identify and frame the current AV safety challenges.

Koopman and Wagner

Phil Koopman and Michael Wagner have been among the most prolific researchers in AV safety. One focus of their work has been to better define the novel challenges inherent in AV safety.

In their first overview paper on the topic, they highlight the infeasibility of complete testing, whether black box or white box. Removing the human driver requires the computer system to take over all exception handling, dramatically increasing complexity compared with current automotive systems such as ADAS. This complexity, combined with the stochastic nature of many AV algorithms, makes it difficult to validate that all safety requirements are known, correct, and unambiguous. Further, ambiguity in requirements translates into ambiguity in testing: how do you judge whether a test passed when there is no unique correct system behavior?
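
One common response to the "no unique correct behavior" problem is to judge a test against a safety envelope instead of a single expected output. The minimal Python sketch below assumes a hypothetical trace format (`Sample`) and hypothetical thresholds (`MIN_GAP_M`, `MAX_DECEL_MPS2`); it illustrates the idea and is not Koopman and Wagner's method.

```python
from dataclasses import dataclass

# Hypothetical envelope thresholds; real values would come from the
# system's safety requirements, not from this sketch.
MIN_GAP_M = 2.0        # minimum allowed distance to any obstacle (m)
MAX_DECEL_MPS2 = 6.0   # maximum allowed braking deceleration (m/s^2)


@dataclass
class Sample:
    t: float           # time (s)
    gap_m: float       # distance to nearest obstacle (m)
    accel_mps2: float  # longitudinal acceleration (m/s^2), negative = braking


def passes_envelope(trace: list[Sample]) -> bool:
    """Return True if every sample stays inside the safety envelope.

    Instead of comparing against one "correct" trajectory, the oracle
    accepts any behavior that never violates the envelope properties.
    """
    return all(
        s.gap_m >= MIN_GAP_M and -s.accel_mps2 <= MAX_DECEL_MPS2
        for s in trace
    )
```

With this kind of oracle, a gentle early stop and a firmer late stop can both pass, while any trace that closes the gap below the minimum fails, even though none of them matches a unique reference trajectory.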

In a later overview paper, Koopman and Wagner describe the multidisciplinary nature of the AV safety problem, where the AV development organization is a complex system with many interactions. Further, they highlight AV design, testing, and validation issues including the challenges of

- handling virtually infinite environmental situations

- validating inductive reasoning

- articulating unambiguous system requirements.

At the time, they considered the effectiveness of the ISO 26262 standard for validating autonomous vehicles an open question. Their deep involvement in developing the UL 4600 standard strongly suggests they ultimately answered that question in the negative. See the section below for additional discussion of the limitations of ISO 26262.

RAND Corporation

In her prepared testimony to Congress, Nidhi Kalra of RAND highlights the inadequacy of current safety analysis methods for AVs. She stresses that current standards assume a human operator is present to correct errors. These standards work well when inputs, outputs, and failure modes are well specified, which is hard to achieve for the large volumes (gigabytes per second) of complex, diverse sensor data in autonomous vehicles. She calls for a technology race to develop new methods for demonstrating and managing AV safety. Further, she emphasizes the need to rigorously and objectively validate the statistical soundness of AV testing, presumably using these new methods.
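
To see why statistical soundness is hard, consider the simplest zero-failure demonstration test. Assuming failures arrive as a Poisson process with constant rate λ per mile, the probability of seeing no failures in n miles is exp(-λn), so showing with confidence C that the true rate is below λ requires n ≥ -ln(1 - C)/λ failure-free miles. The benchmark rate in the sketch (one fatality per 100 million miles) is an illustrative assumption, not a figure taken from the testimony.

```python
import math


def miles_for_zero_failure_demo(rate_per_mile: float, confidence: float) -> float:
    """Failure-free miles needed to show, at the given confidence, that the
    true failure rate is below rate_per_mile.

    Assumes a Poisson failure process (constant rate, independent events),
    so P(0 failures in n miles) = exp(-rate * n).
    """
    if not (0.0 < confidence < 1.0) or rate_per_mile <= 0.0:
        raise ValueError("need 0 < confidence < 1 and a positive rate")
    return -math.log(1.0 - confidence) / rate_per_mile


# Illustrative assumption: a benchmark of 1 fatality per 100 million miles.
benchmark = 1.0 / 100e6
print(f"{miles_for_zero_failure_demo(benchmark, 0.95):.3g} miles")
```

At 95% confidence this comes to roughly 300 million failure-free miles, before even asking whether those miles are representative of the operational domain.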

Safety Critical Systems Club

Rob Alexander, Rob Ashmore, and Andrew Banks provide a progress report to the SCSC on solving some of the challenges of AV safety. They too highlight the need for quantitative metrics for the effectiveness of AV safety testing. In particular, the detection of distributional shift between training, test, validation, and operational data is a difficult problem for machine learned systems.
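
As a deliberately simple example of distributional shift detection, the sketch below applies a two-sample Kolmogorov-Smirnov test to a single scalar feature (for instance, a hypothetical per-frame brightness value) drawn from training data and from operational data. The function names and the choice of feature are assumptions for illustration; real perception inputs are high-dimensional, which is exactly what makes the problem hard.

```python
from bisect import bisect_right


def ks_statistic(reference: list[float], operational: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of the two samples."""
    a, b = sorted(reference), sorted(operational)
    d = 0.0
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)  # fraction of a that is <= x
        cdf_b = bisect_right(b, x) / len(b)  # fraction of b that is <= x
        d = max(d, abs(cdf_a - cdf_b))
    return d


def shift_detected(reference: list[float], operational: list[float],
                   c_alpha: float = 1.36) -> bool:
    """Flag a shift if D exceeds the large-sample critical value
    c_alpha * sqrt((n + m) / (n * m)); c_alpha = 1.36 is the usual
    coefficient for a significance level of 0.05."""
    n, m = len(reference), len(operational)
    critical = c_alpha * ((n + m) / (n * m)) ** 0.5
    return ks_statistic(reference, operational) > critical
```

A per-feature test like this can catch gross shifts cheaply, but it says nothing about shifts that only appear in combinations of features.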

Proposed architectural solutions face a difficult trade-off between giving the AV freedom to operate and providing strong safety guarantees. The authors also identify several impractical approaches. Among them is the proposal to simply put enough AVs on the road to accumulate sufficient statistical evidence of safety. Because such evidence lacks structure, it cannot be subdivided, nor can it be updated when the environment or the AV system changes.

NHTSA

In an NHTSA report assessing automotive safety standards, Qi Hommes (now with Zoox) highlights that existing standards do not adequately address environmental impacts over the vehicle lifecycle (e.g., periodic testing, degradation).

The report also highlights the difficulty of obtaining statistically valid failure probabilities for the ISO 26262 exposure and controllability assessments, and suggests that severity alone could be used as a risk measure for software. In my view, its repeated emphasis on “statistically valid probabilities” reflects a distinctly frequentist view of probability. A Bayesian approach offers an attractive and practical alternative! (See my work below.)
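
To illustrate the Bayesian alternative, the sketch below places a conjugate Beta prior on a per-demand failure probability. Even with zero observed failures it yields a usable point estimate and a credible upper bound, and an informative prior could encode expert judgment or evidence from similar systems. This is a generic textbook example under an assumed model of independent demands, not the method of the NHTSA report or of my paper.

```python
def beta_posterior(a: float, b: float, failures: int, trials: int) -> tuple[float, float]:
    """Conjugate update of a Beta(a, b) prior on a per-demand failure
    probability after observing `failures` in `trials` independent demands."""
    return a + failures, b + trials - failures


def posterior_mean(a: float, b: float) -> float:
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


def zero_failure_upper_bound(trials: int, credibility: float = 0.95) -> float:
    """Upper credible bound on p with a uniform Beta(1, 1) prior and zero failures.

    The posterior is Beta(1, trials + 1), whose CDF is 1 - (1 - p)**(trials + 1),
    so the bound has a closed form.
    """
    return 1.0 - (1.0 - credibility) ** (1.0 / (trials + 1))


a_post, b_post = beta_posterior(1.0, 1.0, failures=0, trials=1000)
print(posterior_mean(a_post, b_post), zero_failure_upper_bound(1000))
```

With 0 failures in 1,000 demands and a uniform prior, the posterior mean is 1/1002 and the 95% upper bound is about 0.003, with no need to wait for failures to occur before quantifying risk.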

Hazard Analysis Case Study

Adedjouma et al provide a case study applying ISO 26262 hazard analysis to an AV for public transportation. Among the issues they encountered was the difficulty of eliciting a complete list of operational situations; they state that no sufficient process for this currently exists. Similarly, malfunction elicitation under ISO 26262 may miss specification errors and emergent properties.

Beyond these challenges, machine learning algorithms require a statistical evaluation of accuracy during nominal operation, which falls outside traditional safety paradigms. The authors also experienced bias in selecting exposure, controllability, and severity factors, suggesting that a new evaluation method is required. Finally, they suggest that quantitative methods and metrics need to be developed for autonomy.
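
The sensitivity to rating bias is easy to see from how ISO 26262-3 combines the three ratings into an ASIL. For severity S1 to S3, exposure E1 to E4, and controllability C1 to C3, the standard's lookup table is equivalent to summing the class indices (10 gives ASIL D, 9 ASIL C, 8 ASIL B, 7 ASIL A, anything lower QM). The sketch relies on that well-known shortcut; check any real assessment against the table in the standard itself.

```python
def asil(s: int, e: int, c: int) -> str:
    """ASIL for severity S1-S3, exposure E1-E4, controllability C1-C3.

    Uses the sum shortcut that reproduces the ISO 26262-3 lookup table for
    these classes (S0, E0 and C0 are excluded here).
    """
    if not (1 <= s <= 3 and 1 <= e <= 4 and 1 <= c <= 3):
        raise ValueError("expected S1-S3, E1-E4, C1-C3")
    return {10: "ASIL D", 9: "ASIL C", 8: "ASIL B", 7: "ASIL A"}.get(s + e + c, "QM")
```

A single one-level disagreement, say E3 versus E4 for a severe, hard-to-control hazard (`asil(3, 3, 3)` versus `asil(3, 4, 3)`), moves the result from ASIL C to ASIL D, so subjective bias in any one rating propagates directly into the required rigor.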

Challenges of ISO 26262

A number of authors have discussed AV challenges specifically related to ISO 26262 and its shortcomings. One key shortcoming is that the standard limits its scope to only hazards caused by malfunctions of electrical, electronic, and programmable electronic equipment.

As noted in this study, the above definition excludes failures caused by human error, component interactions, environmental error, and other system failures in the absence of component failures. Others have noted that this definition also excludes misbehavior of machine learned (ML) algorithms. The standard offers no methods for the unique requirements of ML safety assurance.

Beyond the scope limitations of ISO 26262, others have questioned the standard's structural adequacy for AVs. In one paper and a later follow-up, Koopman et al build a case that current safety standards are inadequate for AV development, pointing to novel technologies and the absence of humans in the loop as key contributors to the complexity.

Further on structural adequacy, Martin et al question whether the ISO 26262 model of analyzing one function failure at a time is appropriate when the complex interactions blur the clear definition of functions.

Beyond Road Vehicles

Some researchers have taken a broader view of autonomous system safety. They seek to find commonality between autonomous systems in automotive, space, maritime, and other industries. The 2019 IWASS conference (and now the 2021 conference) sought to build cross-industry consensus through a series of papers and workshops.

Ramos et al identify strong interactions between software, hardware, and human actors as one of the defining characteristics of all autonomous systems. Most conventional quantitative risk assessment methods rely on the separation principle, which may not work well for tightly coupled autonomous systems.
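
A small numerical example shows how an independence assumption can mislead when components are coupled. The sketch compares an AND gate for two redundant channels computed under independence with the classic beta-factor common-cause model, in which a fraction beta of each channel's failure probability comes from a shared cause. The beta-factor model is swapped in here purely to illustrate coupling; it is not what Ramos et al propose, and the numbers are hypothetical.

```python
def p_both_fail_independent(p_a: float, p_b: float) -> float:
    """AND gate under the independence (separation) assumption."""
    return p_a * p_b


def p_both_fail_beta_factor(p: float, beta: float) -> float:
    """AND gate for two identical redundant channels under the beta-factor
    common-cause model.

    A fraction `beta` of each channel's failure probability is a shared cause
    that fails both channels at once; the rest is independent per channel.
    """
    common = beta * p
    independent = (1.0 - beta) * p
    # Both fail if the common cause occurs, or both independent parts occur
    # (inclusion-exclusion, treating the common and independent causes as independent).
    return common + independent**2 - common * independent**2
```

With p = 1e-3 and beta = 0.05, independence predicts 1e-6, while the beta-factor model gives about 5.1e-5, roughly 50 times higher: the coupling, not the individual failure rates, dominates the result.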

In response to this, Andrews et al advocate new modeling techniques which holistically capture the interdependencies in autonomous systems. While acknowledging the strengths of qualitative methods such as STPA, they see quantification methods playing a bigger role in the future.

In another workshop, Thieme and Ramos highlight the importance of risk assessment in decision-making and health monitoring, calling for the development of applied projects in quantitative risk assessment (QRA) for autonomous systems to highlight the advantages of QRA.

My Own Work

In my recently published paper, Toward a Hybrid Causal Framework for Autonomous Vehicle Safety Analysis, we systematically analyzed the AV safety challenges from the literature in order to identify common themes. We then used these common themes, along with the shortcomings of existing approaches, to inform the requirements for a new safety analysis framework.

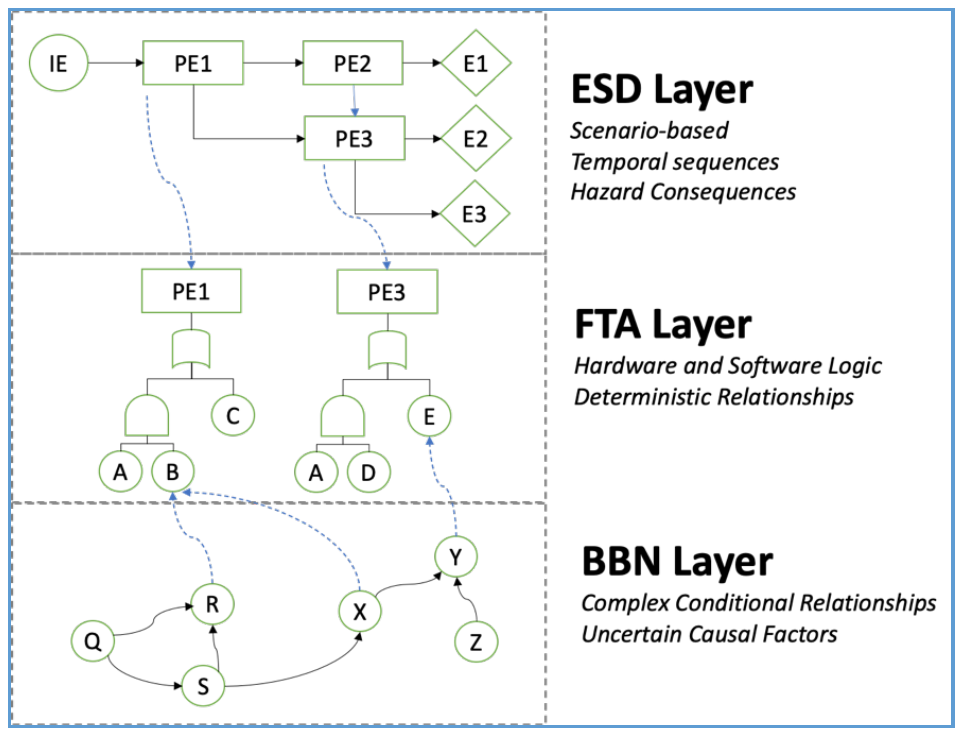

The proposed Hybrid Causal Logic (HCL) framework integrates temporal modeling (Event Sequence Diagrams, ESDs), logical failure modeling (Fault Trees), and soft causal factor modeling (Bayesian Belief Networks, BBNs) into a cohesive quantitative model for AV safety. The power and flexibility of the HCL framework come primarily from three aspects:

- The ESD layer allows clear probabilistic definition of complex operational scenarios (including multiple outcomes, random time delays, competing events, concurrent sequences, etc.)

- The BBN layer provides the power and flexibility to accurately model complex dependencies and uncertainties (e.g., conditional probabilities, multi-state variables, imprecise probabilities)

- The HCL framework integrates the three layers so that the most appropriate modeling tool may be used for each part of the analysis
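
A toy example can make the layering concrete. In the sketch below, a one-node BBN (weather) sets the probabilities of two basic events, a fault tree combines them into a perception-failure top event, and a short ESD for a hypothetical "pedestrian enters path" initiating event turns that into end-state probabilities. All names and numbers are hypothetical, and a full HCL tool would handle the layer integration far more generally; this is only a hand-rolled sketch of the idea, not the implementation from the paper.

```python
# BBN layer (hypothetical numbers): a soft causal factor, weather,
# conditions the probabilities of the fault tree's basic events.
P_WEATHER = {"clear": 0.9, "fog": 0.1}
P_CAMERA_MISS = {"clear": 0.01, "fog": 0.2}   # P(camera misses | weather)
P_LIDAR_MISS = {"clear": 0.02, "fog": 0.05}   # P(lidar misses | weather)

# Hypothetical top-event probability of a separate braking fault tree.
P_BRAKE_FAIL = 1e-4


def p_perception_failure() -> float:
    """Fault tree layer: perception fails only if camera AND lidar both miss.

    Marginalizes over the weather node; within one weather state the two
    sensors are assumed conditionally independent.
    """
    return sum(
        pw * P_CAMERA_MISS[w] * P_LIDAR_MISS[w]
        for w, pw in P_WEATHER.items()
    )


def esd_end_states() -> dict[str, float]:
    """ESD layer: given the initiating event (pedestrian enters path), walk
    the pivotal events (detection, then braking) to end-state probabilities."""
    p_miss = p_perception_failure()
    return {
        "safe stop": (1 - p_miss) * (1 - P_BRAKE_FAIL),
        "collision: braking failure": (1 - p_miss) * P_BRAKE_FAIL,
        "collision: missed detection": p_miss,
    }


print(esd_end_states())
```

Marginalizing over weather gives a perception-failure probability of 0.00118, whereas multiplying the sensors' marginal miss probabilities (0.029 × 0.023) would give about 0.00067. The shared soft cause nearly doubles the risk, which is the kind of dependency the BBN layer is meant to capture.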

Author's Note: The above paper was originally supposed to be the first of three journal articles on this topic, but life got in the way… Please check it out anyway! HCL is a very flexible and powerful framework that I think has a lot of potential for autonomy. Here is a recent scholarly article on Hybrid Causal Logic for Autonomous Ships.

Thank You

Thanks for reading! Now that you know all of the AV safety challenges, time to get to work! Please check out some of our other popular posts, including:

Probably mobile machinery safety (including ISO 12100 and IEC 62061, as well as the IEC 61508 standards) will help in facing the challenges.

A comment on “Challenges of ISO 26262”:

ISO 26262 uses the wording “malfunctioning behaviour” in its definition of safety. This very wording suggests that it’s indeed inclusive of environmental conditions and component interactions, unlike what’s mentioned in the article.

I think the common understanding of ISO 26262 is a misinterpretation of the standard: it is not intended to exclude these considerations, and in no way should analysis and certification according to it consider only component “failures”.